ASO Overview

ASO is Essbase's alternative to the sometimes cumbersome BSO method of storing data in an Essbase database. In fact, it is BSO that is exactly what makes Essbase a superior OLAP analytical tool but it is also the BSO that can occasionally be a detriment to the level of system performance demanded in today's business world.

In a BSO database, all data is stored, except for dynamically calculated members. All data consolidations and parent-child relationships in the database outline are stored as well. While the block storage method is quite efficient from a data to size ratio perspective, a BSO database can require large amounts of overhead to deliver the retrieval performance demanded by the business customer.

The ASO database efficiently stores not only zero level data, but can also store aggregated hierarchical data with the understandings that stored hierarchies can only have the no-consolidation (~) or the addition (+) operator assigned to them and the no-consolidation (~) operator can only be used underneath Label Only members. Outline member consolidations are performed on the fly using dynamic calculations and only at the time of the request for data. This is the main reason why ASO is a valuable option worth consideration when building an Essbase system for your customer.

Because of the simplified levels of data stored in the ASO database, a more simplified method of storing the physical data on the disk can also be used. It is this simplified storage method which can help result in higher performance for the customer.

Your choice of one database type over the other will always depend on balancing the customer's needs with the server's physical capabilities, along with the volume of data. These factors must be given equal consideration.

Creating an aggregate storage Application|Database

Creating an ASO Essbase application and database is as easy as creating a BSO application and database. All you need to do is follow these simple steps:

1. Right-click on the server name in your EAS console for the server on which you want to create your ASO application.

2. Select Create application | Using aggregate storage as shown in the following screenshot:

Hierarchy Dimensions in ASO

Hierarchies

Dimensions can have one or more hierarchies of the members. Included with your installation of Essbase, you get the sample ASO application called ASOsamp to use as a guide when learning about ASO.

In ASO, there are two types of hierarchies:

· Stored hierarchies

· Dynamic hierarchies

Dimension can be a Stored & dimension hierarchy

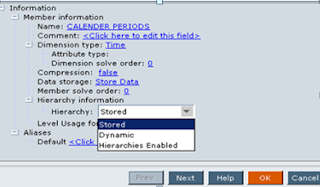

1. Some dimension can have both Stored & dimension hierarchy in such scenarios multiple dimensions enable Right click on the member ->click on edit properties -> On the Member Properties screen, under the Hierarchy section, select Hierarchies Enabledfrom the Hierarchy list box: Stored.

· Time dimension:QTD YTD can’t be enabled in time period to enable this we will enable the alternate hierarchy shared member

MTD:Jan start till Jul

MTD:Jan start till Jul

Jan | Feb |March

Static Dimension

|

Dynamic Dimension

|

Static Dimension:aggregation used are only “+” ,No other Consolidation ‘s like “MINUS” are used

Parent has to be label only then only we can tag that as no consolidation other wise it will have + consolidation where A is the parent and xyz the children

It cant’ have member formula

|

Dynamic dimension which have dynamic hierarchy can have member formula

It can have any consolidation

|

To enable multiple hierarchies, follow these steps:

Right-click on the dimension or member name you wish to enable the multiple hierarchies on. Click on Edit member properties….

On the Member Properties screen, under the Hierarchy section, select Hierarchies Enabledfrom the Hierarchy list box: Stored

Stored hierarchies

Using Stored hierarchies, the data is aggregated based on the outline structure. The data aggregation and data retrieval is faster. There are a few restrictions when using Stored hierarchies:

· Stored hierarchies cannot have member formulas.

· Stored hierarchies can have the no-consolidation (~) operator for Label-only members. A member that is label only is merely in the outline as a place holder or to be informational and does not store data. A good example of this is our Calendar Periods dimension. While the root member Calendar Periods is useful to have for information, the data would make no sense rolled up to this level.

Dynamic hierarchies

Using Dynamic hierarchies, the data is not aggregated but is calculated at the time of the data retrieval. Since the data is calculated at the time of retrieve, the response time for the output is longer. The account dimension is always tagged as Dynamic hierarchies and you cannot change the account dimension to stored hierarchy. The advantages of the Dynamic hierarchies are:

· Dynamic hierarchies can have formulas

· Dynamic hierarchies can have any consolidation operator

The following screenshot shows an example of how the Dynamic and Stored hierarchies are used in the sample ASO database. In the sample ASO database's case, you can see that the Time dimension hasMultipleHierarchies Enabled:

Outline paging

This is one major difference between a Block Storage application and an Aggregate Storage application that provides a noticeable boost in performance. Unlike a BSO application, where the database outline must be loaded into memory as a single element, the database outlines in ASO databases are created and stored in what can be considered a page-able format. This means that instead of Essbase loading the entire database outline into memory, the page-able outline can be loaded into memory either one page or section at a time. This can free up resources and can help make data retrieval and data aggregations faster by reducing the amount of memory consumed by large database outlines.

Aggregation

There is no need for complex calculation scripts in an ASO database. Now, you may be wondering how the data gets aggregated without performing any calculated aggregation? How fast will my data get retrieved? In an ASO database, the data gets loaded only into the level 0 (leaf node) cells. When the user attempts to retrieve data at a level higher than the zero level, the data is dynamically aggregated. Also, remember a good portion of this aggregated data is not physically stored in the database. As the database size increases, the dynamic aggregation consumes more time. In order to improve the database performance, you may need to pre-aggregate the data.

MDX query language

It’s just a piece of the MaxL scripting language we haven't gone over with you yet.

You may recall how we've gone over the MaxL scripting language previously. Well, the MaxL scripting language actually has two pieces. These MaxL pieces are known as MaxL DDL for Data Definition Language, which is the piece you are already familiar with and MaxL MDX for Multidimensional Expressions.

Why have we not explained something like MDX in-depth already? The reason is that while the DDL piece of MaxL contains many powerful functions that are written in relatively easy to understand syntax and it can easily replace the Essbase Command scripting language (EssCmd) as your primary tool for automating database maintenance and support processes, the MDX piece of MaxL is more of a data querying language. Yes, MDX is very powerful as is DDL, but its usefulness can be debated since there are several other methods of querying data in Essbase that are just as effective and easier or more convenient to use. The Essbase Reports scripting language with the aid of the Essbase Query Designer, is one example that comes to mind as an effective data querying tool. MDX also supports the XML for Analysis (XMLA) API.

MDX however, has its place and its place is where it is best suited. The place for MDX is querying ASO Essbase databases.

http://docs.oracle.com/cd/E12032_01/doc/epm.921/html_techref/maxl/dml/calc2mdx.htm

Basically it is same as for a BSO application.

To create an ASO application and database, you follow virtually the same steps as you do to create a BSO application and database. However, there are some important differences .

· A BSO database outline can be converted into an Aggregate Storage database outline, but an Aggregate Storage database outline cannot be converted into a Block Storage database outline.

Steps to convert a BSO application into an ASO application:

Steps to convert a BSO application into an ASO application:

1. Open the BSO outline that you wish to convert, select the Essbase database and click on theFile | Wizards | Aggregate Storage Outline Conversion option. You will see the first screenSelect Source Outline. The source of the outline can be in a file system or on the Essbase Server. In this case, we have selected the OTL from the Essbase Server and then click Next as shown in the following screenshot:

Differences between ASO &BSO

ASO

| |

· ASO Aggregation is faster

· There is no write back capability till 9 version.

· As per 9.3.1 un documented version we can perform lock & send by using the transparent partition of BSO as source and ASO being the target.

· 9.3.1 no write back on aso

· Transparent partition - from bso source ) would reflect in aso

Source:BSO Target :ASO

· We can’t convert ASO-BSO Cube

· ASO cubes are used when there are more than 10 dimensions mostly they are sparse dimensions.

· We can’t lock and send from ASO

· Formula’s can’t be stored in ASO

· EPM 11 Suite ASO Features

static dimension cant’ have member formula

· Dynamic dimension which have dynamic hierarchy can have member formula

· It doesn’t support calc scripts

· ASO cube can be backed up ARBORPATH/APP/Name

· It has Sparse dimensions

· It is used only used for Reporting

· Aggregate storage databases enable dramatic improvements in both :

· Database aggregation time and dimensional scalability.

· The aggregate storage kernel is an alternative to the block storage kernel. Aggregate storage databases typically address read-only, “rack and stack” applications that have large dimensionality.

· Plannning doesn't support ASO

· Not suitable for Budgeting and forecasting

· ASO does not allow you to preview the outline changes

· The concept of dimensional precedence is known as solve order.

|

· BSO Cubes has write back capability users can input numbers and they can perform lock &send to the essbase and retrieve the data Supports calc scripts

· BSO-ASO Conversion is possible

· BSO has dense dimensions

· BSO application has load rules, partitions etc.

· It has more of Dense dimensions

· Hyperion Planning uses only BSO cube and this sits on top of Essbase

· It is used for Reporting,Forecasting & budgeting.

· BSO Two –pass calculation

· To get the desired out put .

· No Iteration process is involved in 2 pass as it calculates first from 1

|

SOLVE ORDER USAGE:

The value of the solve order property determines the priority with which Essbase calculates the

formulas. The formulas on the members that have a specified solve order are calculated in order

from the lowest solve order to the highest.You can specify a solve order between 0 and 127. The default is 0.

You can specify the solve order at the member level or at the dimension level.

Setting the solve order for each calculated member is recommended when you use multiple

calculated members to create ratios or custom totals.

Essbase uses the following information to define calculation precedence:

1. Member solve order

2. Dimension solve order (members without formulas for which you do not specify a member

solve order inherit the solve order of their dimension. Members with formulas for which

you do not specify a member solve order have a solve order of zero.)

If multiple members have the same solve order, the members are evaluated in the reverse

order in which their dimensions occur in the database outline. The member that occurs later

in the outline takes precedence.

The tie situation calculation order is different for calculated members defined in an MDX

query for block storage databases. See the Oracle Essbase Technical Reference.

Note: When a member formula is dependant on the value of another member, the member

with the formula must have a higher solve order than the member or members on

which it depends. For example, in the ASOsamp.Sample database outline in

Avg Units/Transaction depends on the value of Units and

of Transactions. Avg Units/Transaction must have a higher solve order than Units

and Transactions.

Inherit Differences between ASO & BSO

Data Load Differences

Difference between Essbase 7.x\9.x\EPM 11

Essbase ASO Limitations in 7.x

|

New ASO Features 9.3

New ASO Features 9.0 ,9.2

|

EPM 11

|

· Level 0 Data Loads only

· Data Load Performance

· No Time Balancing

· No Write Back

· No Complexity Calculations

|

· Improved Restructuring

· Data base outline can be changed

· Ability to export level 0 data

· Kernel Improvements(Data base compression &De hierarchy)

· Support for duplicate member names

· Extended Substitutional variable support

· Essbase 9.3 Release

New ASO Features 9.3

-Time Intelligence

- Time Balance

-Intelligent aggregations

-Dynamic ranking

Essbase 9.3.1

New ASO Features 9.3.1

-Concurrent Loads(multiple load buffers on ASO Cube)

-Incremental Loads(Streams /tickled feeds)

-Query Cancellation

-Write Back to level zero(Not documented on 11.1.1)

|

Partial Data clear

ASO Partial Data Clear is a new option that enables you to clear a well defined region of the ASO Cube

Two types of clear region are available-

v Physical clear of cells in region

v Logical Clear of cells in a region

Can be achieved by MDX Script.

Syntax.

1.alter database <appname>.<dbname> clear data in region '{MDX Set Expression}' [physical]

2.alter database ASOSamp.Sample clear data in region '{[Jan]}';

ASO PARTIAL DATA CLEAR BENEFITS

v A portion of cube can be erased with out affecting other data areas.

v Data can be cleared by setting the values to 0 can be removed with a logical clear ,which is faster.

v Data that must be permanently erased can be fully removed with a physical clear which is slow.

ü Target of Partition

Previously in version 9.3.1

v Full support only for BSO Partioning

v ASO Could only be the source of a transparent partition

NEW FEATURE IN 11.1.1

v ASO Can be the source of a transparent partition

v BSO Can be used for complex calculations

v ASO Can be used for aggregation performance benefits.

v New Calculation Scripts

v New MDX Functions

v Write back to level zero (Fully Documented)

ASO TARGET PARTITION BENEFITS

· Vertical portioning

v Consolidate Multiple cubes into target.

v Target provides unified view into multiple source cubes.

· Measures with different granularity

v Overcomes limitation of write back to upper levels.

v Cell can be a level 0

· Member in the source,but an upper level member in the Target

· New Calc script functions

· New Functions list

· New MDX Functions

v Julian date(Date)

v Converts a given unix date to Julian equivalent

v UnixDate(date)

v -Converts a given julian date to its Unix Equivalent

v GetNextDay(Date)

v -converts a given date to the next weekday date

|

Block

- A Block is the basic unit of storage in essbase

- A cell is created for every intersection of stored members in the dense dimensions.

- Each cell takes 8 bytes, and ideal size of block is 10k to 100k

- Data block is created as long as one cell has a data value.

- Ex : Dense Data Block for Sample Basic Database

Data block

- A data block is comprised of the intersection of stored members from all the dense dimensions

Calculate block size and potential number of blocks

Block size It is the multiplication of stored members of all dense dimensions times 8 ( 8*N )

Potential number of blocks :It is the multiplication of all stored members of all sparse dimensions.

When you run a calc all - the entire database will calculate.

All stored parent values will be recalculated and stored.

Hence the increase in your index and page files.

There are many factors that can contribute to database size and calculation times.

First - your dense/sparse settings.

In most typical financial applications - both time and accounts are dense.

The block size should be around 160kb.

You get the block size by multiplying the number of members in your dense dimensions by 8 kb. So - if you have 19 mbrs (12 mths + 4 qtrs + total year + beg balance) in your time dimension and 1000 accts - then your block size would be 152kb.

Many times applications are separated based upon accounts (like P&L in one cube, and Balance Sheet in another.)

If your app/db is not financially oriented you'll want to test what dense/sparse settings give you the best density level in your block.

Second - review the optimizing calculations section of the essbase database admin guide.

There are several server config (LOCKBLOCK etc.) and database (index cache, etc.) settings

you will want to change to allow Essbase to leverage memory for the calculation.

Lastly - never do a "CALC ALL".

Take a few minutes to look at the Essbase Technical Reference (from the document map html) - after 10+ yrs of working with Essbase I still look at this every week.

Do a fix to limit to just the say the Year and Version you wish to calculate after a fresh data load, the do a "CALC DIM".

Also - you need not calculate any dimensions that are flat without any consolidation point.

If you do not have any member formulas you need to calculate - and you are only calculating sparse dimensions - you can do an "AGG".

Block Categories

ü Non input level blocks

ü Level 0 blocks

ü Upper Level blocks

Tuning

Outline Optimization

There are several ways to optimize load

1. Grouping of Sparse member combinations

2. Making the data source as small as possible

3. Making source fields as small as possible

4. Positioning the data in the same order as the outline

5. Loading from Essbase Server

6. Managing parallel data load processing

Calculation Optimization

What are the design considerations for calculation optimization?

You can configure a database to optimize calculation performance. The best configuration for the site depends on the nature and size of the database.

· Block Size(8Kb to 100Kb) and Block Density

· Order of Sparse Dimensions

· Incremental Data Loading

· Database Outlines with Two or More Flat Dimensions

· Formulas and Calculation Scripts

When does Fragmentation occur?

Fragmentation is likely to occur with the following:

· Read/write databases that users are constantly updating with data

· Databases that execute calculations around the clock

· Databases that frequently update and recalculate dense members

· Data loads that are poorly designed

· Databases that contain a significant number of Dynamic Calc and Store members

· Databases that use an isolation level of uncommitted access with commit block set to zero

How can you measure fragmentation?

You can measure fragmentation using the average clustering ratio or average fragmentation Quotient.

Using the average fragmentation quotient

Any quotient above the high end of the range indicates that reducing fragmentation may help performance

Small (up to 200 MB) 60% or higher

Medium (up to 2 GB) 40% or higher

Large (greater than 2 GB) 30% or higher

Using the average clustering ratio:

The average clustering ratio database statistic indicates the fragmentation level of the data (.pag) files. The maximum value, 1, indicates no fragmentation.

How do you can prevent and remove fragmentation?

You can prevent and remove fragmentation:

· To prevent fragmentation, optimize data loads by sorting load records based upon sparse dimension members. For a comprehensive discussion of optimizing data load by grouping sparse members.

· To remove fragmentation, perform an export of the database, delete all data in the database with CLEARDATA, and reload the export file.

· To remove fragmentation, force a dense restructure of the database.

Database restructuring

Why is database restructuring?

As your business changes, you change the Essbase database outline to capture new product lines, provide information on new scenarios, reflect new time periods, etc. Some changes to a database outline affect the data storage arrangement, forcing Essbase to restructure the database.

Types of database restructuring

What are the types of database restructuring?

The two ways by which a database restructure is triggered:

Conditions affecting Database restructuring

Conditions affecting Database restructuring?

Intelligent Calculation, name changes, and formula changes affect database restructuring:

· If you use Intelligent Calculation in the database, all restructured blocks are marked as dirty whenever data blocks are restructured. Marking the blocks as dirty forces the next default Intelligent Calculation to be a full calculation.

· If you change a name or a formula, Essbase does not mark the affected blocks as dirty. Therefore, you must use a method other than full calculation to recalculate the member or the database.

Files used during Restructuring

When Essbase restructures both the data blocks and the index, it uses the files described

essxxxxx.pag Essbase data file

essxxxxx.ind Essbase index file

dbname.esm Essbase kernel file that contains control information used for db recovery

dbname.tct Transaction control table

dbname.ind Free fragment file for data and index free fragments

dbname.otl Outline file in which is defined all metadata for a database and how data is stored

Actions that improve performance for restructuring

What are the actions that improve performance for restructuring?

There are a number of things you can do to improve performance related to database restructuring:

· If you change a dimension frequently, make it sparse. · Use incremental restructuring to control when Essbase performs a required database restructuring. · Select options when you save a modified outline that reduce the amount of restructuring required.

Which restructure operations are faster?

These types of restructure operations are listed from fastest to slowest:

Essbase initiates an implicit restructure of the database files after an outline is changed using Outline Editor or Dimension Build. The type of restructure that is performed depends on the type of changes made to the outline

What is Explicit Restructures?

When you manually initiate a database restructure, you perform an explicit restructure. An explicit restructure forces a full restructure of the database. A full restructure comprises a dense restructure plus removal of empty blocks.

What is Dense restructure?

If a member of a dense dimension is moved, deleted, or added, Essbase restructures the blocks in the data files and creates new data files. When Essbase restructures the data blocks, it regenerates the index automatically so that index entries point to the new data blocks.

Empty blocks are not removed. Essbase marks all restructured blocks as dirty, so after a dense restructure you need to recalculate the database.

What is Sparse restructure?

If a member of a sparse dimension is moved, deleted, or added, Essbase restructures the index and creates new index files.

Restructuring the index is relatively fast; the amount of time required depends on the size of the index.

What is Outline-only restructure?

If a change affects only the database outline, Essbase does not restructure the index or data files.

Member name changes, creation of aliases, and dynamic calculation formula changes are examples of changes that affect only the database outline.

Dense Restructure Process

To perform a dense restructure, Essbase does the following:

Sparse Restructure Process

When Essbase does a sparse restructure (restructures just the index), it uses the following files:· essxxxxx.ind· dbname.otl· dbname.esm

Data compression

Essbase allows you to choose whether data blocks that are stored on disk are compressed, as well as which compression scheme to use. When data compression is enabled, Essbase compresses data blocks when it writes them out to disk. Essbase fully expands the compressed data blocks, including empty cells, when the blocks are swapped into the data cache.

Generally, data compression optimizes storage use. You can check compression efficiency by checking the compression ratio statistic.

Types of data compression

Essbase provides several options for data compression:

1. Bitmap compression, the default. Essbase stores only non-missing values and

uses a bitmapping scheme. A bitmap uses one bit for each cell in the data block, whether the cell value is missing or non-missing. When a data block is not compressed, Essbase uses 8 bytes to store every non-missing cell. In most cases, bitmap compression conserves disk space more efficiently. However, much depends on the configuration of the data.

2. Run-length encoding (RLE). Essbase compresses repetitive, consecutive values --any value that repeats three or more times consecutively, including zeros and #MISSING values. Each data value that is repeated three or more times uses 8 bytes plus a 16 byte repetition factor.

3. zlib compression. Essbase builds a data dictionary based on the actual data being compressed. This method is used in packages like PNG, Zip, and gzip. Generally, the more dense or heterogeneous the data is, the better zlib will compress it in comparison to bitmap or RLE compression.

4. Index Value Pair compression. Essbase applies this compression if the block density is less than 3%.Index Value Pair addresses compression on databases with larger block sizes, where the blocks are highly sparse. zlib does not use this.

5. No compression. Essbase does not compress data blocks when they are written to disk

RLE Compression Usage

When do you use RLE over Bitmap Compression?

Use RLE over Bitmap When,

Average block density very low (< 3%).

Database has many consecutive repeating Values.

Data compression disable procedure

When to disable compression?

You may want to disable data compression if blocks have very high density (90% or greater) and have few consecutive, repeating data values. Under these conditions, enabling compression consumes resources unnecessarily. Don't use compression if disc space/memory is not an issue compared to your application. It can become a drain on the processor.

Data locks

Essbase issues write (exclusive) locks for blocks that are created, updated, or deleted, and issues read (shared) locks for blocks that should be accessed but not modified. By issuing the appropriate locks, Essbase ensures that data changed by one operation cannot be corrupted by a concurrent update.

Ans: UDA's values are never displayed in the reports and hence do not impact report performance.

How does Attribute dim impact report performance?

Ans: They highly impact the report performance as the attributes are calculated dynamically when referenced in the report. For very large number of att dim displayed in the report, the performance could drastically reduce.

While loading the data, you have applied both the selection criteria as well as rejection criteria to a same record. What will be the outcome.

Ans: The record will be rejected.

How is data stored in the Essbase database?

Ans: Essbase is an file based database where the data is stored in PAG files of 2 GB each and grows sequentially.

Index file

An index entry is comprised of the intersection of exactly one member from each sparse dimension

Page file

A 2Gig of an index entry and a block comprises a page file

Transaction

What is a transaction?

When a database is in read/write mode, Essbase considers every update request to the server (such as a data load, a calculation, or a statement in a calculation script) as a transaction.

Transaction control file

What is transaction control file?

Essbase tracks information about transactions in a transaction control file (dbname.tct).

The transaction control file contains an entry for each transaction and tracks the current state of each transaction (Active, Committed, or Aborted).

Isolation level &Types of isolation levels

What is isolation level and what are the types of isolation levels?

Isolation levels determine how Essbase commits data to disk. Essbase offers two isolation levels for transactions --committed access and uncommitted access (the default).

What is commited access?

When data is committed, it is taken from server memory and written to the database on disk. Essbase automatically commits data to disk. There are no explicit commands that users perform to commit data blocks.

Committed and Uncommitted access?

Committed:

Committed at the end of a transaction. Data retained till then.

All blocks in question locked.

Pre-Image Access: If enabled, Read only access allowed

Wait Times:

Indefinite

Immediate Access or no Wait

No. of Seconds Specified

Uncommitted:

Committed only at synchronization points.

Block by Block Locks.

Commit Row: No of rows of data loaded when Sync point occurs.

Commit Block: No. of Blocks Modified when Sync Point occurs.

For Rollback, Commit Row=0 and Commit Block=0

Advantages and disadvantages of using committed access

You can optimize data integrity by using committed access.

Setting the isolation level to committed access may increase memory and time requirements for database restructure.

Transaction that is always in committed mode

The Spreadsheet Add-in lock and Send and the Grid API are always in Committed Access Mode

What are the memory caches used by Essbase to coordinate memory usage? Essbase uses five memory caches to coordinate memory usage 1. Index Cache 2. Data File Cache 3. Data Cache 4. Calculator Cache 5. Dynamic Calculator Cache

Index cache

The index cache is a buffer in memory that holds index pages. How many index pages are in memory at one time depends upon the amount of memory allocated to the cache.

Data file cache

The data file cache is a buffer in memory that holds compressed data files (.pag files). Essbase allocates memory to the data file cache during data load, calculation, and retrieval operations, as needed. The data file cache is used only when direct I/O is in effect.

Data cache

The data cache is a buffer in memory that holds uncompressed data blocks. Essbase allocates memory to the data cache during data load, calculation, and retrieval operations, as needed.

Calculator cache

The calculator cache is a buffer in memory that Essbase uses to create and track data blocks during calculation operations.

Dynamic calculator cache

The dynamic calculator cache is a buffer in memory that Essbase uses to store all of the blocks needed for a calculation of a Dynamic Calc member in a dense dimension (for example, for a query).

Memory caches used by Essbase to coordinate memory usage

Essbase uses five memory caches to coordinate memory usage

Index Cache: Min -1024 KB (1048576 bytes) Default - Buffered I/O : 1024 KB (1048576 bytes);Direct I/O : 10240 KB (10485760 bytes) Opt -Combined size of all essn.ind files, if possible; as large as possible otherwise.Do not set this cache size higher than the total index size, as no performance improvement results.

Data File Cache: Min - Direct I/O: 10240 KB(10485760 bytes) Default -Direct I/O: 32768 KB(33554432 bytes) Opt -Combined size of allessn.pag files, if possible; otherwise as large as possible.This cache setting not used if Essbase is set to use buffered I/O.

Data Cache:Min - 3072 KB (3145728 bytes) Default - 3072 KB (3145728 bytes) Opt -0.125 * the value of data file cache size.

Calculator Cache:Min - 4 bytes Max: 200,000,000 bytes Default - 200,000 bytes Opt -The best size for the calculator cache depends on the number and density of the sparse dimensions in your outline. The optimum size of the calculator cache depends on the amount of memory the system has available.

Essbase alias tables provide a nice way for users in different countries, speaking different languages, to share and understand the same member names in their native tongue. This can be very valuable in gaining the user acceptance you need for your new cube since people will feel more comfortable with something they understand.

An Essbase cube may contain up to ten different alias tables so there's a lot of flexibility available. There are two ways to create alias tables:

1.Create an empty alias table and then populate the members manually

2.Import an alias table from a source file which contains the desired member names

Essbase Tuning

What is Hour glass model in essbase

This is used for performance tuning \optimize performance

v It can be done by checking the blocksize

v Dense sparse dimension settings

v It depends on the member size there shouldn’t be any special characters( % !)

v Data cache :No of blocks should not be exceeded

v Outline performance

v Report scripts performance

v Calcscripts performance

v Restructuring performance(Dense)

The hour glass model is described implicit with the calculation order. Essbase calculates block by block - taking the entire block into memory.

Taking a block into memory is a resource intensive operation which is subject of tuning. A similar resource intensive action is searching for blocks. Almost all databases will be aggregated over different dimensions (mostly large and sparse) which takes most of the time during a calculation.

Taking these 3 together and the calculation order that is build into Essbase, minimizing the swapping from one dimension to the other is very important. Therefore the last dimension should be the largest sparse dimension.

Taking a block into memory is a resource intensive operation which is subject of tuning. A similar resource intensive action is searching for blocks. Almost all databases will be aggregated over different dimensions (mostly large and sparse) which takes most of the time during a calculation.

Taking these 3 together and the calculation order that is build into Essbase, minimizing the swapping from one dimension to the other is very important. Therefore the last dimension should be the largest sparse dimension.

For Block Storage the recommendation is hour glass design. There are variations on this, and some times an hour glass design is not optimal. It really depends on the individual database and you have to test different configurations to yield the best results.

One popular variation on the hour glass is to have Largest Dense to Smallest Dense, then Smallest aggregating sparse to largest aggregating sparse, then non-aggregating sparse dimensions. Many times this config will yield better performance than traditional hour glass, but this needs to be tested.

One popular variation on the hour glass is to have Largest Dense to Smallest Dense, then Smallest aggregating sparse to largest aggregating sparse, then non-aggregating sparse dimensions. Many times this config will yield better performance than traditional hour glass, but this needs to be tested.

Because the calculation is done from the top down unless you choose the opposite, so when you do calculation it's best to have the the outline in this order:

1- Dimension tagged: Accounts

2- Dimension tagged: Time

3- Densest dimensions

4- Least dense dimensions

5- Least Sparse aggregating dimensions

6- Most Sparse aggregating dimensions

7- Non aggregating Sparse dimensions

This format represents the hour-glass which gives best performance most of the time.

1- Dimension tagged: Accounts

2- Dimension tagged: Time

3- Densest dimensions

4- Least dense dimensions

5- Least Sparse aggregating dimensions

6- Most Sparse aggregating dimensions

7- Non aggregating Sparse dimensions

This format represents the hour-glass which gives best performance most of the time.

Outline Optimization

How do you optimize outline?

Usually the outline is optimized using the hourglass design for dimension ordering i.e,

· Dimension with Accounts tag

· Dimension with Time tag

· Largest Dense dimension

· Smallest dense dimension

· Smallest Sparse dimension

· Largest Sparse dimension

· Dimension with Accounts tag

· Dimension with Time tag

· Largest Dense dimension

· Smallest dense dimension

· Smallest Sparse dimension

· Largest Sparse dimension

Ways to improve performance

What are the ways to improve performance during data loads? There are several ways to optimize load

1. Grouping of Sparse member combinations

2. Making the data source as small as possible

3. Making source fields as small as possible

4. Positioning the data in the same order as the outline

5. Loading from Essbase Server

6. Managing parallel data load processing

Calculation Optimization

What are the design considerations for calculation optimization? You can configure a database to optimize calculation performance. The best configuration for the site depends on the nature and size of the database.

· Block Size(8Kb to 100Kb) and Block Density

· Order of Sparse Dimensions

· Incremental Data Loading

· Database Outlines with Two or More Flat Dimensions

· Formulas and Calculation Scripts

When does Fragmentation occur?

Fragmentation is likely to occur with the following:

· Read/write databases that users are constantly updating with data

· Databases that execute calculations around the clock

· Databases that frequently update and recalculate dense members

· Data loads that are poorly designed

· Databases that contain a significant number of Dynamic Calc and Store members

· Databases that use an isolation level of uncommitted access with commit block set to zero

How can you measure fragmentation?

You can measure fragmentation using the average clustering ratio or average fragmentation Quotient.

Using the average fragmentation quotient

Any quotient above the high end of the range indicates that reducing fragmentation may help performance

Small (up to 200 MB) 60% or higher

Medium (up to 2 GB) 40% or higher

Large (greater than 2 GB) 30% or higher

Using the average clustering ratio:

The average clustering ratio database statistic indicates the fragmentation level of the data (.pag) files. The maximum value, 1, indicates no fragmentation.

How do you can prevent and remove fragmentation?

You can prevent and remove fragmentation:

· To prevent fragmentation, optimize data loads by sorting load records based upon sparse dimension members. For a comprehensive discussion of optimizing data load by grouping sparse members.

· To remove fragmentation, perform an export of the database, delete all data in the database with CLEARDATA, and reload the export file.

· To remove fragmentation, force a dense restructure of the database.

Database restructuring

Why is database restructuring? As your business changes, you change the Essbase database outline to capture new product lines, provide information on new scenarios, reflect new time periods, etc. Some changes to a database outline affect the data storage arrangement, forcing Essbase to restructure the database.

Types of database restructuring

What are the types of database restructuring? The two ways by which a database restructure is triggered:

- Implicit Restructures

- Dense restructure

- Sparse restructure

- Outline-only restructure

- Explicit Restructures

Conditions affecting Database restructuring

Conditions affecting Database restructuring? Intelligent Calculation, name changes, and formula changes affect database restructuring:

· If you use Intelligent Calculation in the database, all restructured blocks are marked as dirty whenever data blocks are restructured. Marking the blocks as dirty forces the next default Intelligent Calculation to be a full calculation.

· If you change a name or a formula, Essbase does not mark the affected blocks as dirty. Therefore, you must use a method other than full calculation to recalculate the member or the database.

Files used during Restructuring

When Essbase restructures both the data blocks and the index, it uses the files describedessxxxxx.pag Essbase data file

essxxxxx.ind Essbase index file

dbname.esm Essbase kernel file that contains control information used for db recovery

dbname.tct Transaction control table

dbname.ind Free fragment file for data and index free fragments

dbname.otl Outline file in which is defined all metadata for a database and how data is stored

Actions that improve performance for restructuring

What are the actions that improve performance for restructuring? There are a number of things you can do to improve performance related to database restructuring:

· If you change a dimension frequently, make it sparse. · Use incremental restructuring to control when Essbase performs a required database restructuring. · Select options when you save a modified outline that reduce the amount of restructuring required.

Which restructure operations are faster?

These types of restructure operations are listed from fastest to slowest:

· Outline only (no index or data files)· Sparse (only index files) · Dense (index files and data files) as a result of adding, deleting, or moving members and other operations · Dense (index and data files) as a result of changing a dense dimension to sparse or changing a sparse dimension to dense

What is Implicit Restructures? Essbase initiates an implicit restructure of the database files after an outline is changed using Outline Editor or Dimension Build. The type of restructure that is performed depends on the type of changes made to the outline

What is Explicit Restructures?

When you manually initiate a database restructure, you perform an explicit restructure. An explicit restructure forces a full restructure of the database. A full restructure comprises a dense restructure plus removal of empty blocks.

What is Dense restructure?

If a member of a dense dimension is moved, deleted, or added, Essbase restructures the blocks in the data files and creates new data files. When Essbase restructures the data blocks, it regenerates the index automatically so that index entries point to the new data blocks.

Empty blocks are not removed. Essbase marks all restructured blocks as dirty, so after a dense restructure you need to recalculate the database.

What is Sparse restructure?

If a member of a sparse dimension is moved, deleted, or added, Essbase restructures the index and creates new index files.

Restructuring the index is relatively fast; the amount of time required depends on the size of the index.

What is Outline-only restructure?

If a change affects only the database outline, Essbase does not restructure the index or data files.

Member name changes, creation of aliases, and dynamic calculation formula changes are examples of changes that affect only the database outline.

Dense Restructure Process

To perform a dense restructure, Essbase does the following:

1. Creates temporary files that are copies of the .ind, .pag, .otl, .esm, and .tct files. Each temporary file substitutes either N or U for the last character of the file extension, so the temporary file names are .inn, essxxxxx.inn, essxxxxx.pan, dbname.otn, dbname.esn, and dbname.tcu. 2. Reads the blocks from the database files copied in step 1, restructures the blocks in memory, and then stores them in the new temporary files. This step takes the most time. 3. Removes the database files copied in step 1, including .ind, .pag, .otl, .esm, and .tct files. 4. Renames the temporary files to the correct file names: .ind, .pag, .otl, .esm, and .tct.

Sparse Restructure Process

When Essbase does a sparse restructure (restructures just the index), it uses the following files:· essxxxxx.ind· dbname.otl· dbname.esm

Data compression

Essbase allows you to choose whether data blocks that are stored on disk are compressed, as well as which compression scheme to use. When data compression is enabled, Essbase compresses data blocks when it writes them out to disk. Essbase fully expands the compressed data blocks, including empty cells, when the blocks are swapped into the data cache.Generally, data compression optimizes storage use. You can check compression efficiency by checking the compression ratio statistic.

Types of data compression

Essbase provides several options for data compression:1. Bitmap compression, the default. Essbase stores only non-missing values and

uses a bitmapping scheme. A bitmap uses one bit for each cell in the data block, whether the cell value is missing or non-missing. When a data block is not compressed, Essbase uses 8 bytes to store every non-missing cell. In most cases, bitmap compression conserves disk space more efficiently. However, much depends on the configuration of the data.

2. Run-length encoding (RLE). Essbase compresses repetitive, consecutive values --any value that repeats three or more times consecutively, including zeros and #MISSING values. Each data value that is repeated three or more times uses 8 bytes plus a 16 byte repetition factor.

3. zlib compression. Essbase builds a data dictionary based on the actual data being compressed. This method is used in packages like PNG, Zip, and gzip. Generally, the more dense or heterogeneous the data is, the better zlib will compress it in comparison to bitmap or RLE compression.

4. Index Value Pair compression. Essbase applies this compression if the block density is less than 3%.Index Value Pair addresses compression on databases with larger block sizes, where the blocks are highly sparse. zlib does not use this.

5. No compression. Essbase does not compress data blocks when they are written to disk

RLE Compression Usage

When do you use RLE over Bitmap Compression? Use RLE over Bitmap When,

Average block density very low (< 3%).

Database has many consecutive repeating Values.

Data compression disable procedure

When to disable compression? You may want to disable data compression if blocks have very high density (90% or greater) and have few consecutive, repeating data values. Under these conditions, enabling compression consumes resources unnecessarily. Don't use compression if disc space/memory is not an issue compared to your application. It can become a drain on the processor.

Data locks

Essbase issues write (exclusive) locks for blocks that are created, updated, or deleted, and issues read (shared) locks for blocks that should be accessed but not modified. By issuing the appropriate locks, Essbase ensures that data changed by one operation cannot be corrupted by a concurrent update.

How can we display UDA's in reports? How do they impact report report performance.

Ans: UDA's values are never displayed in the reports and hence do not impact report performance.

How does Attribute dim impact report performance?

Ans: They highly impact the report performance as the attributes are calculated dynamically when referenced in the report. For very large number of att dim displayed in the report, the performance could drastically reduce.

While loading the data, you have applied both the selection criteria as well as rejection criteria to a same record. What will be the outcome.

Ans: The record will be rejected.

How is data stored in the Essbase database?

Ans: Essbase is an file based database where the data is stored in PAG files of 2 GB each and grows sequentially.

Index file

An index entry is comprised of the intersection of exactly one member from each sparse dimension

Page file

A 2Gig of an index entry and a block comprises a page file

Transaction

What is a transaction?

When a database is in read/write mode, Essbase considers every update request to the server (such as a data load, a calculation, or a statement in a calculation script) as a transaction.

Transaction control file

What is transaction control file?

Essbase tracks information about transactions in a transaction control file (dbname.tct).

The transaction control file contains an entry for each transaction and tracks the current state of each transaction (Active, Committed, or Aborted).

Isolation level &Types of isolation levels

What is isolation level and what are the types of isolation levels?

Isolation levels determine how Essbase commits data to disk. Essbase offers two isolation levels for transactions --committed access and uncommitted access (the default).

What is commited access?

When data is committed, it is taken from server memory and written to the database on disk. Essbase automatically commits data to disk. There are no explicit commands that users perform to commit data blocks.

Committed and Uncommitted access?

Committed:

Committed at the end of a transaction. Data retained till then.

All blocks in question locked.

Pre-Image Access: If enabled, Read only access allowed

Wait Times:

Indefinite

Immediate Access or no Wait

No. of Seconds Specified

Uncommitted:

Committed only at synchronization points.

Block by Block Locks.

Commit Row: No of rows of data loaded when Sync point occurs.

Commit Block: No. of Blocks Modified when Sync Point occurs.

For Rollback, Commit Row=0 and Commit Block=0

Advantages and disadvantages of using committed access

You can optimize data integrity by using committed access.

Setting the isolation level to committed access may increase memory and time requirements for database restructure.

Transaction that is always in committed mode

The Spreadsheet Add-in lock and Send and the Grid API are always in Committed Access Mode

What are the memory caches used by Essbase to coordinate memory usage? Essbase uses five memory caches to coordinate memory usage 1. Index Cache 2. Data File Cache 3. Data Cache 4. Calculator Cache 5. Dynamic Calculator Cache

Index cache

The index cache is a buffer in memory that holds index pages. How many index pages are in memory at one time depends upon the amount of memory allocated to the cache.

Data file cache

The data file cache is a buffer in memory that holds compressed data files (.pag files). Essbase allocates memory to the data file cache during data load, calculation, and retrieval operations, as needed. The data file cache is used only when direct I/O is in effect.

Data cache

The data cache is a buffer in memory that holds uncompressed data blocks. Essbase allocates memory to the data cache during data load, calculation, and retrieval operations, as needed.

Calculator cache

The calculator cache is a buffer in memory that Essbase uses to create and track data blocks during calculation operations.

Dynamic calculator cache

The dynamic calculator cache is a buffer in memory that Essbase uses to store all of the blocks needed for a calculation of a Dynamic Calc member in a dense dimension (for example, for a query).

Memory caches used by Essbase to coordinate memory usage

Essbase uses five memory caches to coordinate memory usage

Index Cache: Min -1024 KB (1048576 bytes) Default - Buffered I/O : 1024 KB (1048576 bytes);Direct I/O : 10240 KB (10485760 bytes) Opt -Combined size of all essn.ind files, if possible; as large as possible otherwise.Do not set this cache size higher than the total index size, as no performance improvement results.

Data File Cache: Min - Direct I/O: 10240 KB(10485760 bytes) Default -Direct I/O: 32768 KB(33554432 bytes) Opt -Combined size of allessn.pag files, if possible; otherwise as large as possible.This cache setting not used if Essbase is set to use buffered I/O.

Data Cache:Min - 3072 KB (3145728 bytes) Default - 3072 KB (3145728 bytes) Opt -0.125 * the value of data file cache size.

Calculator Cache:Min - 4 bytes Max: 200,000,000 bytes Default - 200,000 bytes Opt -The best size for the calculator cache depends on the number and density of the sparse dimensions in your outline. The optimum size of the calculator cache depends on the amount of memory the system has available.

Essbase alias tables provide a nice way for users in different countries, speaking different languages, to share and understand the same member names in their native tongue. This can be very valuable in gaining the user acceptance you need for your new cube since people will feel more comfortable with something they understand.

An Essbase cube may contain up to ten different alias tables so there's a lot of flexibility available. There are two ways to create alias tables:

1.Create an empty alias table and then populate the members manually

2.Import an alias table from a source file which contains the desired member names

1)How is data stored in the Essbase database?

Essbase is an file based database where the data is stored in PAG files of 2 GBeach and grows sequentially.

2)While loading the data, you have applied both the selection criteria as wellas rejection criteria to a same record. What will be the outcome?

The record will be rejected.

3)How does Attribute dim impact report performance?

They highly impact the report performance as the attributes are calculateddynamically when referenced in the report. For very large number of att dimdisplayed in the report, the performance could drastically reduce.

4)How can we display UDA's in reports? How do they impact report reportperformance?

A:UDA’s values are never displayed in the reports and hence do not impactreport performance.

5)What is TB First and TB Last?

A:TB First:

in the Sample.Basic database, the accounts member OpeningInventory is tagged as TB First. Opening Inventory consolidates the value of the firstmonth in each quarter and uses that value for that month’s parent. For example,the value for Qtr1 is the same as the value for Jan.

TB Last:

in the Sample.Basic database, the accounts member Ending Inventory istagged as TB Last. Ending Inventory consolidates the value for the last month ineach quarter and uses that value for that month’s parent. For example, the valuefor Qtr1 is the same as the value for Mar.

6)What are filters?

A:A method of controlling access to database cells in essbase. A filter is themost detailed level of security, allowing you to define varying access levels differentusers can have to individual database values.

7)What are different types of attributes?

Essbase supports two different types of attributes.

1. User-Defined attributes

2. Simple attributesUser-Defined attributes: The attributes that are defined by the user.Simple attributes: Essbase supports some attributes, they are: Boolean, date,number, and string.

8)How does Attribute dimensions and UDA's impact batch calculationperformance?

UDA’s- No Impact as they do not perform any inherent calculations. Attribute dim- No Impact as they perform only dynamic calculations.

9)What is the difference between UDA's and Attribute dimensions?

Attribute dimensions provides more flexibility than UDA’s. Attributecalculations dimensions which include five members with the default names sum,count, min, max and avg are automatically created for the attribute dimensions andare calculate dynamically.

10)Why do objects gets locked and when does this happens?

Objects gets locked to prevent users to make simultaneous and conflictingchanges to Essbase database objects. By default whenever an object is accessed through Aministrative services console or Excel spreadsheet add-in, it gets locked

11)What is the difference between classic and EPMA Applications.

The basic difference is that you would be able to share dimensions across applications through EPMA.If you have a Planning application and a HFM app. and they both use a same dimension, then you don't need to create the dimension twice in EPMA. You can share the dimension across both applications. 'Dimension Library' enables youto do that.

12)What is Dynamic Time Series in Hyperion Essbase?

This option is available only when working with Essbase data sources.

Dynamic Time Series members are predefined members used in to-date calculations. Dynamic Time Series members do not appear as members in your database outline; instead, they represent a generation in a Time dimension. For example, in the Essbase Sample Basic database, the Application Designer can create a generation name called Quarter for generation 2 of the Year dimension that contains the data for Qtr1, Qtr2, Qtr3, and Qtr4. When you create the generation name Quarter, Essbase creates and enables a Dynamic Time Series member called Q-T-D.

To use Dynamic Time Series in calculations, you first define the latest time period for which you want data. The latest time period is the level 0 member in a Time dimension. For example, in the Sample Basic database, the level 0 members are the months of the year: Jan, Feb, Mar, and so on. If the current month is August, and you want to know the sales data for the quarter up to the current month, Dynamic Time Series calculation gives you the sales data for the months of July and August.

http://docs.oracle.com/cd/E12825_01/epm.111/sv_user_help/frameset.htm?sel_dts.htm

13)What is a UDA? How is it used?

A UDA is a word or phrase about the dimension member that is associated with it as a characteristic.

How do you back up applications in Essbase?

- Back up application folder

- Back up security file, essbase.sec

- Back up configuration file, essbase.cfg

14)What is a back calc?

It is a second pass on a stored rate by a calculation script to fix that rate for quarters

15)What is the sign to assign a value in a calc script? Equal? Not equal?

=, ==, <>

16)What is the difference between @LEVMBRS and @RELATIVE

@LEVMBRS is used for dimensions, @RELATIVE is used for any parent

17)What is AGGMISSING in a calc script? What is the default? When do you want it off? When on?

-It aggregates missing values. The default is OFF. It’d better be on when loading zero level data. It’d better be off when loading higher level data.

18)What is implied share? What is the risk involved in using it? How do you avoid?

Implied share is when a parent inherits the value of its only rolling child. Essbase will not let the child value change if there is an implied share. Setting the parent to NEVER SHARE will avoid it.

19)Compare CREATENONMISSINGBLK vs CREATEBLOCKONEQ

CREATENONMISSINGBLK creates blocks for every possible block combination. CREATEBLOCKONEQ creates blocks only for the equations.

20)When do you use FIX vs IF in a calc script?

IF on DENSE, FIX on SPARSE

21)What is the default calculation order for a full consolidation?

Accounts, Time, Dense (outline order), Account if Sparse, Time if Sparse, Sparse (outline order)

22)How does calculation work for CALCDIM?

Calc dim will follow a full consolidation order if all dimensions are listed. It does not follow the order in the CALCIM formula.

23)How would you defrag a database and how would you avoid it?

Export and Import the load back, and Dense Restructure. To avoid it, on data loads, sort by the sparse dimensions, Calculate dense dimensions before sparse dimensions

24)What is “average clustering ration”? What ratio is better?

Closer to 1 is better. The higher ratio, the less fragmentation

25)What would this yield for Rebates?

Rebates (+) 50

Rebate Rate (*) $100

Units Sold (+) 50

26)What is the formula equivalent to the hierarchy below for the parent?

Parent -> (((( C1 - C2 ) - C3 ) * C4 ) / C5 )

C1 (-)

C2 (-)

C3 (-)

C4 (*)

C5 (/)

27)Is error files loadable?

Yes

28)What are SSAUDIT files? Are they loadable?

SSAUDIT files are used to track what has been locked and sent thru spreadsheets and planning. Yes they are loadable

29)Where can I find all the declaration of excel VBA commands for Essbase?

BIN/Text file

30)Where does the export file goes to?

Any drive you specify on the server itself

31)How does Essbase determine the order of dimensions in an export file?

Sparse dimensions in the order of outline and then the dense dimensions in the order of outline

32)What is the index file?

An index entry is comprised of the intersection of exactly one member from each sparse dimension

33)What is a page file?

2Gig of an index entry and a block comprises a page file

34)How do you determine the best Dense/Sparse Mix?

Larger dimensions tend to be Sparse, Rapidly changing dimensions tend to be Sparse, Calculation intensive dimensions are usually optimally set to Dense.

35)How can you revert a sign of data in a file?

Load rule (@UDA), or manually

36)What does metaread do in a security filter?

It changes the meta data users can see

37)How would you give a user to only open months in security?

Assign UDAs on closed months or open months and filter on those

38)What is intelligentcalc? What is the pros and cons? What is the default?

Calculation looks for data changes. If you modify the outline, Essbase does not recognize it as a change and do not calculate the data properly. The default is ON.

39) What is the optimum block size?

Less than 100K

40)What is the rule thumb for the optimum block density?

Higher the better

***41)When do you use FIX & IF Statements***

FIX statement is used for sparse dimension

FIX statements are scope restrictions (i.e. Only calculate this particular member)

IF statement is used for Dense Dimension

IF statements can be used in member formulas or calc scripts. IF statements are conditional logic(i.e. IF something is true do one thing otherwise do something else)

Reason: If you FIX on a Dense dimension. Remember dense dimension member will be present in every block of essbase. so if you FIX on dense dimension you are asking to pull all blocks, so the purpose of "FIX" (where you want to pull only some blocks) is lost. If you FIX on sparse dimensions, only required blocks are pulled and calculation will be fast.

42)In what situation I cannot use AGG MISSING ON setting?

In some cases you may need to load data into parent level. Like allocations, for example you load expense data into east region and allocate the expenses to various cost centers under east region based on the headcount or other factors.

If you have AGG MISSING ON and when we aggregate the database. it will clear the parent value if there are no data for children. In such cases we don't use AGGMISSING ON option.

43)Give me an estimate of the largest Essbase db you have worked on (Number of dims, members, calcs, etc)

In my past experience, the biggest databases for BSO Essbase databases were below 10GB in most cases less than 5 GB. And number of dimensions are 8 to 10 with maximum 4 attribute dimensions. In most cases account and period are dense dimensions. number of members are around 5000 for entities. Any dimension members are below 10000.

44)What is the major difference between CALCDIM and AGG commands?

CALCDIM will only rollup only thru one dimension or specified set of dimension and also calculates the formulae associated with the members AGG will roll up all dims ignoring formulae

45)What process do you follow when developing calc scripts?

FIX statement is used for sparse dimension

FIX statements are scope restrictions (i.e. Only calculate this particular member)

IF statement is used for Dense Dimension

IF statements can be used in member formulas or calc scripts. IF statements are conditional logic(i.e. IF something is true do one thing otherwise do something else)

Reason: If you FIX on a Dense dimension. Remember dense dimension member will be present in every block of essbase. so if you FIX on dense dimension you are asking to pull all blocks, so the purpose of "FIX" (where you want to pull only some blocks) is lost. If you FIX on sparse dimensions, only required blocks are pulled and calculation will be fast.

42)In what situation I cannot use AGG MISSING ON setting?

In some cases you may need to load data into parent level. Like allocations, for example you load expense data into east region and allocate the expenses to various cost centers under east region based on the headcount or other factors.

If you have AGG MISSING ON and when we aggregate the database. it will clear the parent value if there are no data for children. In such cases we don't use AGGMISSING ON option.

43)Give me an estimate of the largest Essbase db you have worked on (Number of dims, members, calcs, etc)

In my past experience, the biggest databases for BSO Essbase databases were below 10GB in most cases less than 5 GB. And number of dimensions are 8 to 10 with maximum 4 attribute dimensions. In most cases account and period are dense dimensions. number of members are around 5000 for entities. Any dimension members are below 10000.

44)What is the major difference between CALCDIM and AGG commands?

CALCDIM will only rollup only thru one dimension or specified set of dimension and also calculates the formulae associated with the members AGG will roll up all dims ignoring formulae

45)What process do you follow when developing calc scripts?

- Analyze the requirements documents.

- Decide whether it is required to write calc script or can we create formulae in the database outline.

- Write the calculation script in the incremental method i.e. calculating some members and test for optimization.

EPM 11.1.2.2 New features

Essbase

UNIFORMED NAMING CONVENTIONS UNC PATH

OPMN REPLACES THE HTTP SERVER FOR THE ESSBASE SERVER INSTALLATION

IMPROVED MDX FEATURES

OPTIMISED CALCULATION FUNCTIONS ENHANCEMENT OF BSO Cubes

NEW EAS LOG LEVELS

Smartview-

Supports 64 BIT OF MS OFFICE 2010

Smartview supports two new interfaces\ IT DISPLAYS 2 new ribbons HSF& Predictive Planning which works with oracle planning.

HFM FinaNcial close & disclosure management

HPC

FR

New FR print server is part of web application

No need of installing ghost scripts.

FR Port 8200

It gives the option of installing the framework services & RMI Services

RA Framework port 4500

RA Framework services port range 6800-6805

RA Framework services start port 6800 & RA Framework services end port 6805RMI PORT 8205-8209

APS13080

ODI AGENT PORT 20910

WORKSPACE

STRATEGIC WORK FORCE

,FR REPORTING COMPLIANCE,HPCM

ESSBASE STUDIO ,ESSBASE CLIENT

EPM Systen Default Ports

You can burst snapshots into an internal Workspace folder, but not PDF.

The challenge is to update the security for more than 2000 reports after each bursting. I had to find an automated way to do this rather than manually opening up each file and adding permission to each file.

There is a BatchDriver API which can upload files from a directory into Workspace. So I combined the option of bursting PDF files into an external directory and then used the BatchDriver API to upload the files into Hyperion Workspace with user permissions.

You can use this file to create the Control File for batch uploading.

Please follow the below given steps to upload file into Workspace

Fill in External folder Path (as per FRConfig.cmd Exportfolder definition)

Fill in Workspace Folder Name (this is the PDF upload location)Fill in Workspace Folder Name, where the bursting report/book is located

Fill in financial_reporting_object_name separator used in bursting file

Mention whether Hyperion version is pre 11.1.2.1

Tagging of pre 11.1.2.1 is used to convert spaces to '+'.

Client Requirements -- A Comparative View Between Oracle Hyperion

Financial Management ( HFM ) and an ERP / GL Approach

| Client Requirements | HFM | ERP / GL |

| A user environment that is out of the box and is easy to create including navigation from a single web page | Oracle Hyperion Financial Management includes Workspaces which does not require any complex web programming languages to set up. Setting up rows, columns, scaling, suppression, etc is easy for end users. | ERPs usually does not include any out of the box functionality and requires customization and web programming expertise to create a similar end-user experience. |

| Process workflow and documentation management that supports Sarbanes Oxley requirements | Oracle Hyperion Financial Management has a Process Management feature that includes approve and reject cycle management, as well as, documentation management. This process can be set up and maintained by the finance department and it will help support Sarbanes Oxley requirements. | Any workflow and preventive controls in ERPs require IT programming expertise to set up and maintain. Maintaining documentation is not an option in ERPs |

| Real-time reporting against consolidated results | The HFM application server tier is also the data source. Therefore, there is no need to push consolidated results to another repository to make the results available for reporting enabling real-time reporting against consolidated results. | ERPs would require IT to maintain multiple repositories which would require extra maintenance, extra calculation time, and could cause confusion about which numbers are correct. |

| An easy to use report writer plus a dashboarding tool for graphical navigation and ad-hoc drill and pivot. | Hyperion Financial Reporting or Hyperion Web Analysis do not require any programming expertise to build financial reports. Smartview allows end users to easily create ad hoc reports in Excel. All tools are used and maintained by end-users in the finance and accounting department. | ERPs tend to offers generic BI reporting that is built for technical IT users, not for the finance and accounting department. ERPs reporting can be cumbersome. ERPs does not have an ad hoc reporting tool. New report requests have to be built by someone with IT experience. |

| Out of the box financial intelligence | Oracle Hyperion Financial Managementhas many pre-built features for financial consolidation and reporting including currency translation, intercompany eliminations, minority interest, and a journal entry module for auditable top-side adjustments to name a few. | ERPs have a degree of pre-built functionality, but there is significantly more building to do to accomplish basic consolidation functionality. |

| Smart dimensionality of metadata | Oracle Hyperion Financial Managementhas many pre-built features for financial consolidation and reporting including currency translation, intercompany eliminations, minority interest, and a journal entry module for auditable top-side adjustments to name a few. | ERPs have a degree of pre-built functionality, but there is significantly more building to do to accomplish basic consolidation functionality |

| Supporting detail and documentation for SOX controls as part of the close cycle | Oracle Hyperion Financial Managementsupports line-item details, cell text, and document attachments (Word, Excel, PDFs etc) which can be attached in data or workflow to support and improve the audit trail. | ERPs does not support line-item detail or document attachments though there is support for Cell text. |

| Microsoft Office support and integration | Oracle Hyperion Financial Managementincludes the MS Office (Word, Excel, PowerPoint, etc) add-in Smartview that allows users to create ad hoc reporting, in addition to, giving the users the ability to submit, consolidate, translate and calculate data. The Excel add-in supports ad-hoc drill/pivot or formulas-based retrieval. | Some ERPs only offers an Excel based add-in with no ability to interact with other MS Office applications. The OCH add-in is read-only not allowing the user to write-back to the OCH application, perform calculations and consolidations or display the consolidation status. |

| Easy data translation and loading that provides visibility into the mapping processes | Oracle Hyperion Financial Managementoffers three options including: Hyperion Financial Data Quality Management (FDM) , Hyperion Data Integration Management (DIM), and Oracle Data Integrator (ODI). FDM allows complete visibility into the mapping process and has leading integrated SOX controls and processes built in. FDM is built for the finance team. | Some ERPs only offers one ETL-based solution which requires significant IT support to build and maintain. |

| Visibility into Intercompany matching and elimination | Oracle Hyperion Financial Managementoffers an out of the box intercompany matching feature that can match and eliminate intercompany balances easily across multiple sources. For even more detail, HFM offers the Intercompany Transaction Module for transaction level matching across multiple sources. | ERPs does not offer a multi-source or multi-ERPs instance intercompany balance or transaction matching and elimination feature. It only offers a single instance ERP solution. |

| Audit trail capability | Oracle Hyperion Financial Managementoffers task/audit trail capabilities by documenting who did what and when they did it. In addition, HFM offers a complete history of how a data cell changed over time. | ERP provides audit trail capability as to who changed what and when they did it. However, ERP does not offer anything more comprehensive, such as, cell level tracking. |

| Visibility into managing and monitoring the system | Oracle Hyperion Financial Managementprovides an Administrative Console allowing administrators and end users the ability to monitor peak-period activity, see which users are running which tasks, and even stop tasks mid-stream if required giving the finance department complete visibility to manage and control. | ERP does not provide any system monitoring capability for finance end users. IT has the ability to monitor the system at the server level but it provides only limited visibility. |

| Flexible, role-based security | Oracle Hyperion Financial Managementhas a built in Security Module giving the HFM administrator the ability to address difficult segregation of duties issues and requirements. Security in HFM is setup and maintained by the HFM administrator/Finance department. | ERPs are not as easy to set up or manage. There are many different security profiles that need to be set up and maintained (i.e. at the foundation level, at the reporting level, etc). Security is set-up and managed by IT. |

| Formalized application change management to satisfy SOX requirements | Oracle Hyperion Financial Managementmetadata changes are tracked and monitored giving the HFM administrator visibility into how the metadata has changed over time and who did what and when they did it. In addition, HFM applications can be easily created, maintained, and copied within the same server environment. | ERPs were not developed based on the concept of “applications” that can be maintained independently and compared to each other within the same server environment. ERP has the concept of “instances” which are limited to a single instance per server environment. |

| Integration with other Hyperion products | Integration between Hyperion products, as well as, with external systems including ERP solutions is possible by utilizing tools, such as, Hyperion EPM Architect, Hyperion Data Relationship Management, and FDM allowing for a complete and fully integrated financial analysis solution. | ERPs typically are not integrated with other Hyperion products like Hyperion Planning. |

| Automated Cash Flow | 80-90% of requirements can be automated typically. 100% can be reported out of HFM. | Not available |

| Financed owned application | HFM and FDM are developed and maintained by the Finance and Accounting department. The HFM administrator is typically an individual with a finance background that is comfortable working with technology. | OCH is developed and maintained by IT resources. The Finance and Accounting departments must communicate with the IT team any requirements and changes to the application. |

| Easy integration of new entities (acquisitions, mergers, etc) | Oracle Hyperion Financial Managementallows for the easy integration of an acquired entity before that entity is integrated into the ERP system reducing the risk an acquisition might have on the financial close. | Not available |

Assessment -- Choosing Between Oracle Hyperion Financial Management and ERP

| Assessment | HFM |

ERP / GL